The GPUs available in a container are simply those of the host. Let's give it a try!

Installing CUDA on Host

CUDA is a parallel computing platform that allows using the GPU for general-purpose processing. It is strongly recommended when dealing with machine learning, a resource-intensive task.

The first step is to identify the precise model of my graphics card. This is done easily on Linux using the lspci utility.

[nvidia-smi output table: GPU, Name, Persistence-M, Bus-Id, Disp.A, Volatile Uncorr., Fan, Temp, Perf, Pwr:Usage/Cap, Memory-Usage, GPU-Util, Compute M.]

I now have access to the GPU from my Docker containers! \o/

Benchmarking Between GPU and CPU

It is time to benchmark the difference between GPU and CPU processing. I decided to look for a TensorFlow sample, as it can run on either GPU or CPU.
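A minimal sketch of what such a GPU versus CPU comparison could look like, assuming TensorFlow 2.x is installed in the container. This is not the sample used in the post: the function names (`best_time`, `matmul_on`, `compare_cpu_gpu`), the matrix-multiplication workload and the matrix size are all hypothetical choices made for illustration.

```python
import time


def best_time(fn, repeats=3):
    """Run fn() `repeats` times and return the fastest wall-clock time in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return min(timings)


def matmul_on(device, size=2000):
    """Multiply two random size x size matrices on the given TensorFlow device."""
    # Imported lazily so that best_time() stays usable without TensorFlow.
    import tensorflow as tf

    with tf.device(device):
        a = tf.random.uniform((size, size))
        b = tf.random.uniform((size, size))
        # .numpy() copies the result back to the host, which forces the
        # computation to finish before the timer stops.
        return tf.linalg.matmul(a, b).numpy()


def compare_cpu_gpu(size=2000):
    """Return {'cpu': seconds, 'gpu': seconds or None} for the same workload."""
    import tensorflow as tf

    results = {"cpu": best_time(lambda: matmul_on("/CPU:0", size)), "gpu": None}
    if tf.config.list_physical_devices("GPU"):
        # Warm-up call (assumption: the first GPU call pays one-off setup costs).
        matmul_on("/GPU:0", size)
        results["gpu"] = best_time(lambda: matmul_on("/GPU:0", size))
    return results
```

Calling `compare_cpu_gpu()` inside the container returns both timings, with `gpu` left at `None` when TensorFlow does not detect a GPU. A single matrix multiplication is a crude proxy for a real training job, so the ratio it gives should be read as an order of magnitude at best.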

NVIDIA DOCKER FOR MAC DRIVERS

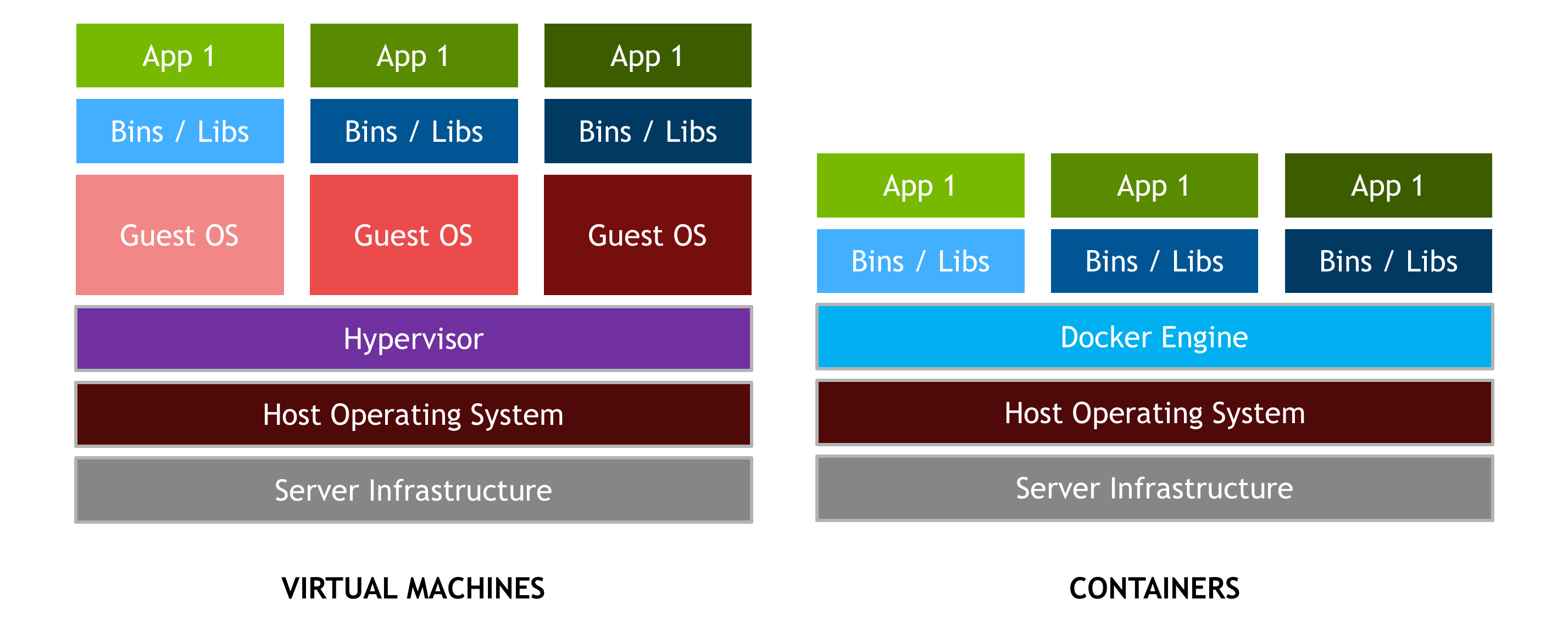

However, as image processing generally requires a GPU for better performance, the first question is: can Docker handle GPUs? Looking for an answer to this question led me to the nvidia-docker repository, described in a concise and effective way as: "Build and run Docker containers leveraging NVIDIA GPUs". Fortunately, I have an NVIDIA graphics card on my laptop. NVIDIA engineers found a way to share GPU drivers from the host with containers, without having to install them in each container individually.
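One way to check that a container can actually reach the host GPU is to run nvidia-smi inside a throwaway container. The sketch below is an assumption-laden illustration, not the procedure from the nvidia-docker README: the helper names are hypothetical, the image tag is only an example, and the `--gpus all` form requires Docker 19.03 or later with the NVIDIA Container Toolkit on the host, while the `nvidia-docker` wrapper is the older route.

```python
import shutil
import subprocess

# Assumption: any CUDA base image that ships nvidia-smi would do here.
EXAMPLE_IMAGE = "nvidia/cuda:12.2.0-base-ubuntu22.04"


def gpu_check_command(image, use_wrapper=False):
    """Build the command that runs nvidia-smi inside a throwaway container."""
    if use_wrapper:
        # Legacy wrapper script provided by the nvidia-docker project.
        return ["nvidia-docker", "run", "--rm", image, "nvidia-smi"]
    # Native Docker flag, available since Docker 19.03.
    return ["docker", "run", "--rm", "--gpus", "all", image, "nvidia-smi"]


def container_sees_gpu(image=EXAMPLE_IMAGE, use_wrapper=False):
    """Return True if nvidia-smi exits successfully inside the container."""
    command = gpu_check_command(image, use_wrapper)
    if shutil.which(command[0]) is None:
        # The docker (or nvidia-docker) executable is not on the PATH.
        return False
    result = subprocess.run(command, capture_output=True, text=True)
    return result.returncode == 0
```

The image itself contains no GPU driver. If `container_sees_gpu()` returns True, the driver was provided by the host at run time, which is the sharing mechanism described above.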

Docker makes it easy to set up even the most complex infrastructures, without polluting the local system.

I'm used to using Docker for all my projects at marmelab. To run machine learning workloads the same way, I have to configure Docker to use my GPU. This is the story of how I managed to do it, in about half a day.

NVIDIA DOCKER FOR MAC INSTALL

Diving into machine learning requires some computation power, mainly brought by GPUs. But I'm reluctant to install new software stacks on my laptop: I prefer installing them in Docker containers, to avoid polluting other programs, and to be able to share the results with my coworkers.
